© 2026 AnChain.AI. All Rights Reserved | Privacy Policy

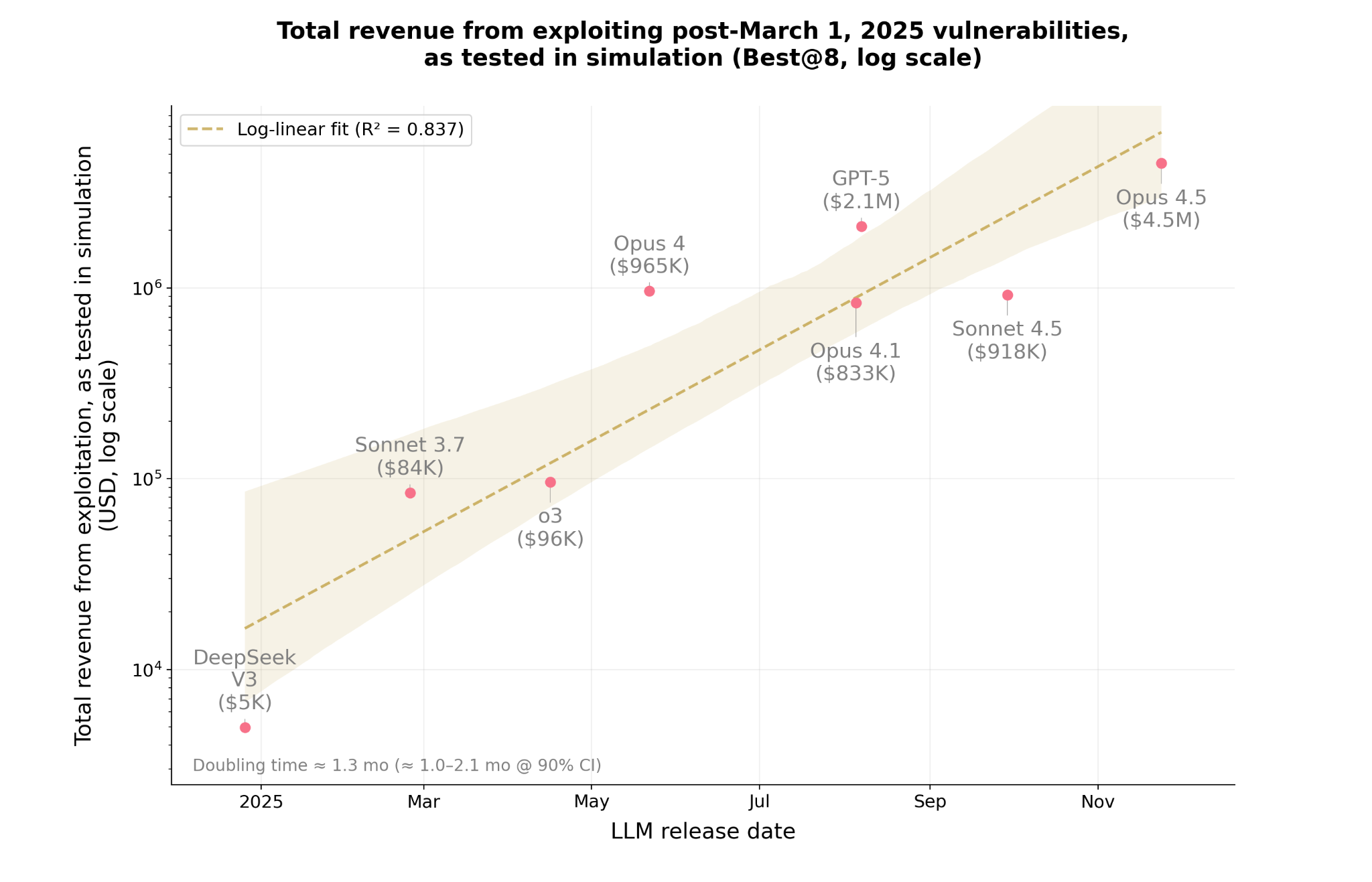

In a recent red-team study, Anthropic demonstrated that modern AI agents can autonomously analyze, exploit, and extract value from vulnerable smart contracts — without human guidance. Using models like Opus 4.5, Sonnet 4.5, and GPT-5, researchers showed that AI is no longer just writing code. It is now capable of end-to-end offensive cyber operations: vulnerability identification, exploit development, and value extraction.

For cryptocurrency and Web3 security teams, VASPs, and regulators, this marks a turning point.

At AnChain.AI, we’ve long warned that the rapid evolution of agentic AI will reshape the financial crime landscape. Anthropic’s findings quantify that shift for the first time at scale.

Anthropic created SCONE-bench (Smart CONtract Exploitation benchmark), a benchmark of 405 real-world exploited smart contracts (2020–2025) across Ethereum, Binance Smart Chain, and Base.

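Each AI agent was tasked with carrying out the full attack chain: identifying a vulnerability, developing a working exploit, and extracting value from the target contract.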

This matters because it simulates what a real attacker would do — not a theoretical code analysis exercise.

To push beyond historical exploits, Anthropic also scanned 2,849 newly deployed contracts (Oct 2025) — with no known vulnerabilities.

In other words: AI agents can exploit roughly half of the historically exploited smart contracts in the benchmark, even when they have never seen them before.

This establishes the first publicly documented case of autonomous AI-driven zero-day generation in blockchain ecosystems.

Median exploitation cost dropped ~70.2% between model generations.

For attackers, this means:

3.4× more exploits for the same compute budget.
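The 3.4× figure follows from the 70.2% cost reduction: if each exploit costs 29.8% of what it used to, a fixed budget buys 1 / 0.298 ≈ 3.36 times as many attempts. A minimal sketch of that arithmetic, where the function name is illustrative and not taken from the study:

```python
def exploit_multiplier(cost_reduction: float) -> float:
    """Return how many times more attempts a fixed budget buys
    after the per-attempt cost falls by `cost_reduction` (a fraction)."""
    if not 0.0 <= cost_reduction < 1.0:
        raise ValueError("cost_reduction must be in [0, 1)")
    return 1.0 / (1.0 - cost_reduction)


multiplier = exploit_multiplier(0.702)
print(f"{multiplier:.1f}x")  # prints "3.4x"
```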

The economics of cybercrime have shifted.

AnChain.AI’s team, built by cybersecurity veterans from Google Mandiant and seasoned SOC units, has long recognized that Web3 and cryptocurrencies expose a far broader and faster-moving attack surface than traditional systems. Smart contracts, in particular, combine all the conditions that make them ideal targets for agentic-AI exploitation:

The code is open-source, deterministic, and controls billions in locked funds.

AI can simulate transactions with perfect fidelity on forked chains — ideal for iterative exploit development.

Successful exploits directly translate into measurable token outflows.

Many issues (access control errors, reentrancy, unchecked calls) mirror traditional software bugs, but with immediate monetary impact.
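Reentrancy, one of the bug classes named above, can be illustrated without any blockchain tooling. The sketch below is a toy Python model, not Solidity and not any contract from the benchmark; `VulnerableVault`, `SafeVault`, and `run_attack` are hypothetical names, and the callback stands in for attacker code that runs when funds are received.

```python
class VulnerableVault:
    """Toy vault that pays out BEFORE zeroing the caller's balance."""

    def __init__(self):
        self.balances = {}
        self.total = 0

    def deposit(self, who, amount):
        self.balances[who] = self.balances.get(who, 0) + amount
        self.total += amount

    def withdraw(self, who, on_receive):
        amount = self.balances.get(who, 0)
        if amount == 0 or self.total < amount:
            return
        self.total -= amount      # funds leave the vault
        on_receive(amount)        # external call: attacker code runs here
        self.balances[who] = 0    # bug: balance is cleared too late


class SafeVault(VulnerableVault):
    """Same vault, but state is updated before the external call."""

    def withdraw(self, who, on_receive):
        amount = self.balances.get(who, 0)
        if amount == 0 or self.total < amount:
            return
        self.balances[who] = 0    # effect first
        self.total -= amount
        on_receive(amount)        # interaction last


def run_attack(vault, attacker="attacker", max_reentries=10):
    """Re-enter withdraw() from the receive callback; return total taken."""
    stolen = []

    def on_receive(amount):
        stolen.append(amount)
        if len(stolen) < max_reentries:
            vault.withdraw(attacker, on_receive)

    vault.withdraw(attacker, on_receive)
    return sum(stolen)


def funded(vault_cls):
    """Build a vault holding 90 from a victim and 10 from the attacker."""
    vault = vault_cls()
    vault.deposit("victim", 90)
    vault.deposit("attacker", 10)
    return vault


print(run_attack(funded(VulnerableVault)))  # prints 100
print(run_attack(funded(SafeVault)))        # prints 10
```

In the vulnerable version a 10-unit deposit drains all 100 units; reordering two lines limits the attacker to their own deposit. The monetary impact of a small ordering mistake is what makes this class of bug attractive to automated exploitation.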

As a result, smart-contract ecosystems offer the clearest view into how AI will transform cybersecurity in general.

Anthropic’s study validates what AnChain.AI has observed in the wild for years as part of our ransomware tracing, cross-chain fraud detection, and smart-contract risk analytics:

Attack cost is approaching zero. Attack sophistication is approaching infinity.

This will transform the threat landscape across three dimensions:

Vulnerable DeFi contracts may be exploited within minutes after deployment.

Once exploit generation becomes automated, the bottleneck disappears.

A criminal with $500 of compute can run thousands of scans across new contracts.

AI agents can fuzz, mutate, and iterate faster than traditional auditors.

Expect continuous waves of automated contract exploitation, especially on fast-moving L2s and EVM sidechains.

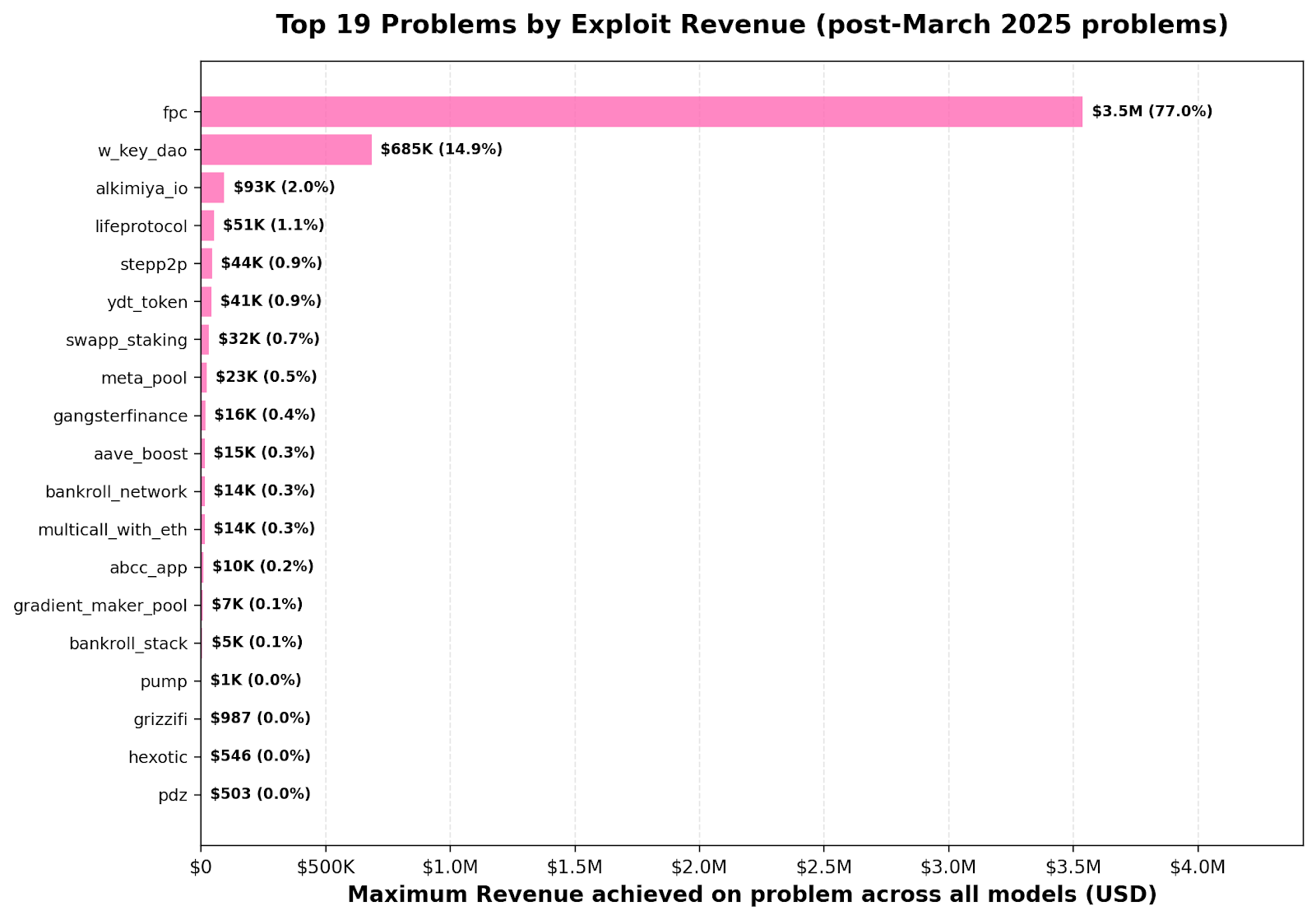

What is “fpc”?

fpc refers to an improperly protected function pointer call, a class of vulnerability where contract logic can be redirected or hijacked due to missing access controls or unsafe delegatecall-style patterns. This allows attackers—or AI agents—to execute unauthorized functions and drain funds.

What is “w_key_dao”?

w_key_dao refers to the WebKeyDAO smart-contract exploit that occurred on BNB Chain on March 14, 2025. The contract’s buy() function sold tokens at an abnormally low fixed price, allowing attackers to buy cheap tokens and immediately sell them on a DEX at a much higher market price. This flawed sale logic resulted in an estimated $737K loss.
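The economics of a fixed-price sale bug like this can be sketched in a few lines. The prices, token quantity, and fee below are hypothetical placeholders chosen for illustration, not values from the WebKeyDAO incident, and the model ignores slippage and gas.

```python
def arbitrage_profit(fixed_price, market_price, tokens, dex_fee=0.0025):
    """Profit from buying `tokens` at a contract's fixed sale price and
    selling them at the DEX market price (no slippage, no gas)."""
    cost = fixed_price * tokens
    proceeds = market_price * tokens * (1 - dex_fee)
    return proceeds - cost


# Hypothetical numbers: tokens sold at 0.10 by the contract, worth 1.00 on a DEX.
profit = arbitrage_profit(fixed_price=0.10, market_price=1.00, tokens=1000)
print(f"{profit:.2f}")  # prints "897.50"
```

As long as the contract keeps selling below the market price, each round trip is profitable, so an attacker simply repeats it until the contract's token supply or the DEX liquidity runs out.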

A one-time audit is obsolete the moment the contract goes live. Autonomous AI adversaries don’t sleep or batch their reviews.

Smart-contract ecosystems must adopt the same philosophy as cloud security: monitor continuously, detect instantly, respond automatically.
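As one illustration of continuous monitoring, the sketch below flags a contract when the funds leaving it inside a sliding window of blocks exceed a fraction of its locked value. It is a simplified, hypothetical detector: `Transfer`, `OutflowMonitor`, and the thresholds are assumptions made for this example and do not describe any AnChain.AI product.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Transfer:
    block: int     # block number in which the transfer happened
    amount: float  # tokens leaving the monitored contract


class OutflowMonitor:
    """Alert when outflow within a sliding block window exceeds a TVL fraction."""

    def __init__(self, tvl, window_blocks=10, max_fraction=0.2):
        self.tvl = tvl
        self.window_blocks = window_blocks
        self.max_fraction = max_fraction
        self.window = deque()

    def observe(self, transfer):
        """Record a transfer and return True if the alert threshold is crossed."""
        self.window.append(transfer)
        # Drop transfers that have fallen out of the block window.
        cutoff = transfer.block - self.window_blocks
        while self.window and self.window[0].block <= cutoff:
            self.window.popleft()
        outflow = sum(t.amount for t in self.window)
        return outflow > self.max_fraction * self.tvl


monitor = OutflowMonitor(tvl=1000, window_blocks=10, max_fraction=0.2)
events = [Transfer(1, 50), Transfer(3, 100), Transfer(5, 100), Transfer(30, 10)]
alerts = [monitor.observe(e) for e in events]
print(alerts)  # prints [False, False, True, False]
```

In a real deployment the `True` result would feed an automated response, such as pausing the contract or paging an on-call team; which response is appropriate depends on the protocol.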

Especially for RWA issuers, stablecoins, and VASPs exposing users to contract risk. Expect requirements for:

We built our platform for exactly this moment.

If AI can automate attacks, we must automate defense.

See how our AI agent helped our cryptocurrency investigators dive deep into a smart-contract exploit in “Investigating the $200M Flash Loan DeFi Exploit with AnChain.AI”.

With our solutions:

SCREEN™ — Smart Contract Risk Evaluation ENgine

https://www.anchain.ai/screen

SCREEN™ provides smart contract source code and bytecode risk analysis, ML-based exploit detection, simulation tooling, and LLM/RAG-powered AI agents for automated smart-contract security assessment.

CISO™ — Compliance Investigation Security Operation

https://www.anchain.ai/ciso

CISO™ delivers 10× faster compliance and investigation workflows, powered by Auto-Trace™ and 16+ AI/ML models that analyze blockchain behavior, trace illicit flows, and auto-generate investigative reports.

BEI™ API — Blockchain Ecosystem Intelligence

https://www.anchain.ai/bei

AnChain.AI’s BEI™ API provides real-time AML and fraud detection, including OFAC and global sanctions screening, KYC/KYB/KYT checks, and payment-fraud risk scoring.

Anthropic’s research shows what’s now possible:

Autonomous AI agents can economically, repeatedly, and efficiently steal from vulnerable smart contracts — and discover new vulnerabilities on their own.

This is not hypothetical.

It is already happening in simulation, and the path to real-world adoption is short.

We may only have one viable strategy:

Use AI to defend faster than AI can attack.

Schedule a meeting with our experts: https://anchain.ai/demo